Safety | 10 May 2024

Partnering with our industry to advance AI transparency and literacy

By: Adam Presser, Head of Operations & Trust and Safety

Today, we're sharing updates on our continued efforts to help creators safely and responsibly express their creativity with AI-generated content (AIGC). TikTok is starting to automatically label AIGC when it's uploaded from certain other platforms. To do this, we're partnering with the Coalition for Content Provenance and Authenticity (C2PA) and becoming the first video sharing platform to implement their Content Credentials technology. To help our community navigate AIGC and misinformation online, we're also launching new media literacy resources, which we developed with guidance from experts including MediaWise.

Investing in transparency as AI evolves

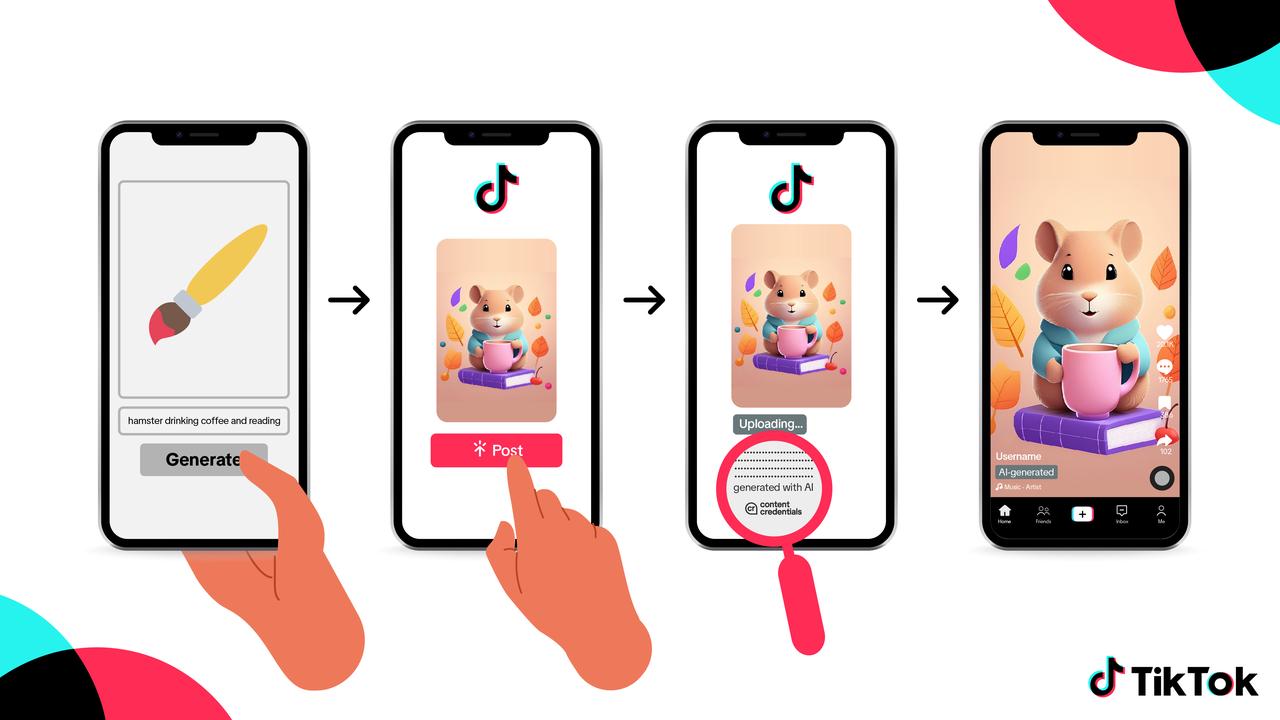

AI enables incredible creative opportunities, but can confuse or mislead viewers if they don't know content was AI-generated. Labeling helps make that context clear—which is why we label AIGC made with TikTok AI effects, and have required creators to label realistic AIGC for over a year. We also built a first-of-its-kind tool to make this easy to do, which over 37 million creators have used since last fall.

Partnering with our industry to label AIGC

Starting today, we're expanding auto-labeling to AIGC created on some other platforms by launching the ability to read Content Credentials, a technology from the Coalition for Content Provenance and Authenticity (C2PA). Content Credentials attach metadata to content, which we can use to instantly recognize and label AIGC. This capability started rolling out today on images and videos, and will be coming to audio-only content soon.
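As a rough illustration of how metadata-based labeling can work, the sketch below checks an already-parsed Content Credentials manifest for a declaration that the media was produced by a generative model. It is a simplified example under stated assumptions, not TikTok's implementation: the manifest is assumed to be available as a Python dictionary with an `assertions` list, the helper name `is_ai_generated` is hypothetical, and the check relies on the IPTC "trainedAlgorithmicMedia" digital source type that C2PA action assertions can carry. Signature and integrity validation, which a real reader must perform first, is omitted.

```python
# Hypothetical sketch: the manifest layout and helper name are assumptions
# made for illustration. Signature validation is deliberately left out.

# IPTC digital source type term used to mark media created by a trained model.
AI_SOURCE_TYPE = (
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
)


def is_ai_generated(manifest: dict) -> bool:
    """Return True if any recorded action declares an AI digital source type."""
    for assertion in manifest.get("assertions", []):
        # Only action assertions are inspected in this sketch.
        if assertion.get("label") != "c2pa.actions":
            continue
        for action in assertion.get("data", {}).get("actions", []):
            if action.get("digitalSourceType") == AI_SOURCE_TYPE:
                return True
    return False


# Made-up manifest resembling what a generative image tool might attach.
example_manifest = {
    "claim_generator": "ExampleImageTool/1.0",  # invented tool name
    "assertions": [
        {
            "label": "c2pa.actions",
            "data": {
                "actions": [
                    {
                        "action": "c2pa.created",
                        "digitalSourceType": AI_SOURCE_TYPE,
                    }
                ]
            },
        }
    ],
}

print(is_ai_generated(example_manifest))  # True
print(is_ai_generated({"assertions": []}))  # False
```

Content without this metadata returns `False` here, which is why a metadata-based approach can only label content from tools that attach Content Credentials.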

Over the coming months, we'll also start attaching Content Credentials to TikTok content, and they will remain attached when the content is downloaded. That means that anyone will be able to use C2PA's Verify tool to help identify AIGC that was made on TikTok and even learn when, where and how the content was made or edited. Other platforms that adopt Content Credentials will be able to automatically label that content as well.

Driving industry adoption

To help drive Content Credentials adoption within our industry, we're also joining the Adobe-led Content Authenticity Initiative (CAI). TikTok is the first video sharing platform to put Content Credentials into practice. This means that the increase in auto-labeled AIGC on TikTok may be gradual at first, since content needs to carry Content Credentials metadata for us to identify and label it. However, as other platforms also implement Content Credentials, we'll be able to label more content.

“With TikTok’s vast community of creators and users globally, we are thrilled to welcome them to both the C2PA and CAI as they embark on the journey to provide more transparency and authenticity on the platform. At a time when any digital content can be altered, it is essential to provide ways for the public to discern what is true. Today’s announcement is a critical step towards achieving that outcome.” - Dana Rao, General Counsel and Chief Trust Officer, Adobe

Advancing media literacy

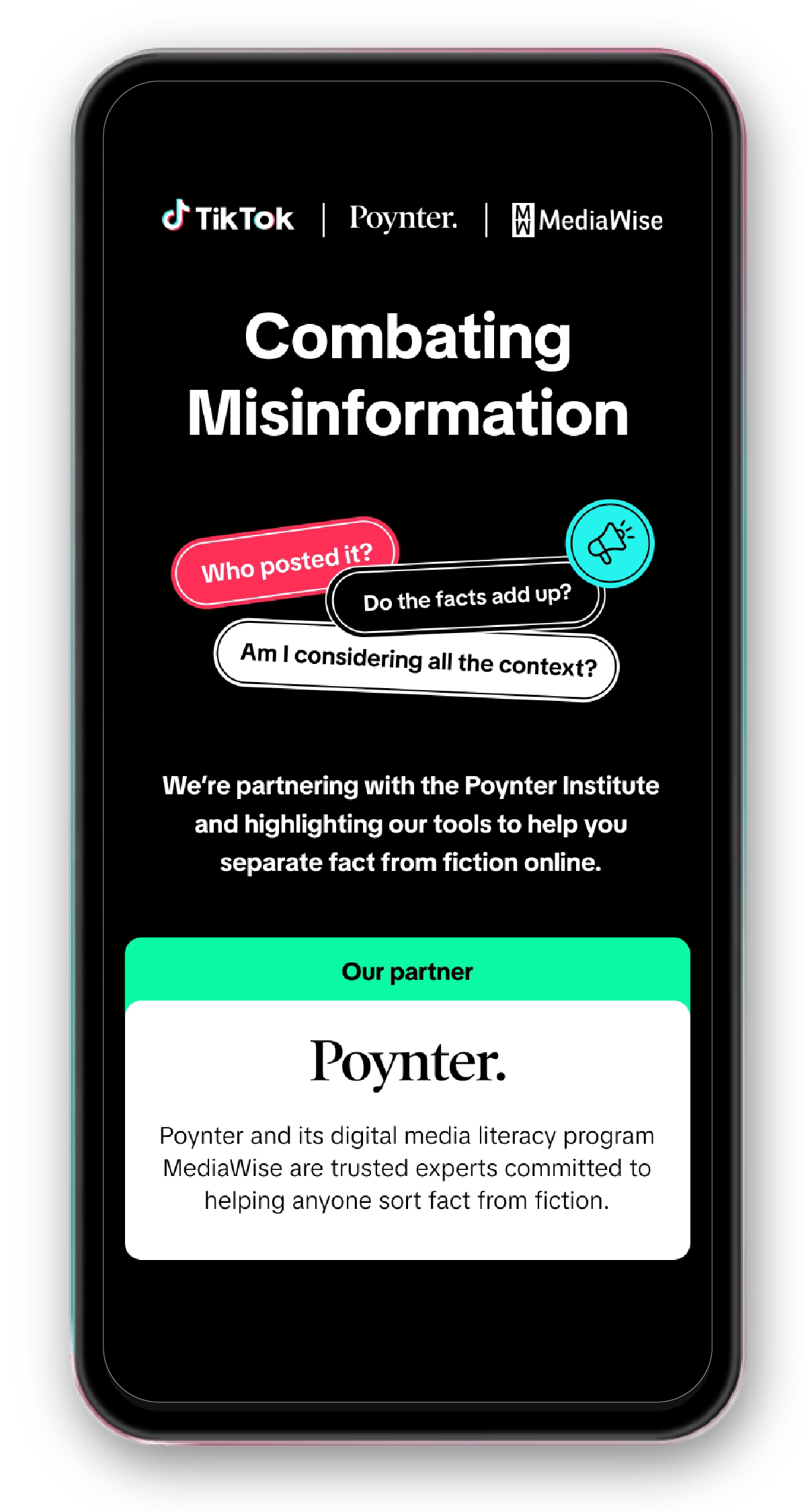

While experts widely recommend AIGC labeling as a way to support responsible content creation, they also caution that labels can cause confusion if viewers don't have context about what they mean. That's why we've been working with experts to develop media literacy campaigns that can help our community identify and think critically about AIGC and misinformation.

Working with MediaWise, a program of the Poynter Institute, we'll be updating our new Safety Center page on misinformation with tips that highlight universal media literacy skills while explaining how TikTok tools like AIGC labels can further contextualize content.

"Our Teen Fact-Checking Network has built an audience with innovative media literacy videos on TikTok since 2019. Five years later, we're thrilled to empower even more people to separate fact from fiction online.” — Alex Mahadevan, Director, MediaWise

Continuing to crack down on harmful content

While most people want to enjoy AI-generated content responsibly, there will be bad actors who use AIGC to intentionally deceive others. We're vigilant against those risks, which is why our policies firmly prohibit harmfully misleading AI-generated content, whether it's labeled or not, and why we signed an industry pact this year to combat the use of deceptive AI in elections. As AI evolves, we continue to invest in combatting harmful AIGC by evolving our proactive detection models, consulting with experts, and partnering with peers on shared solutions. Learn more in our Transparency Center.

Singapore