As more creators take advantage of Artificial Intelligence (AI) to enhance their creativity, we want to support transparent and responsible content creation practices. As part of that effort, we continue to invest in media literacy to empower creativity while giving people important context about content they're viewing. This week, we will begin launching a new tool to help creators label their AI-generated content. We'll also start testing ways to label AI-generated content automatically.

Empowering creators

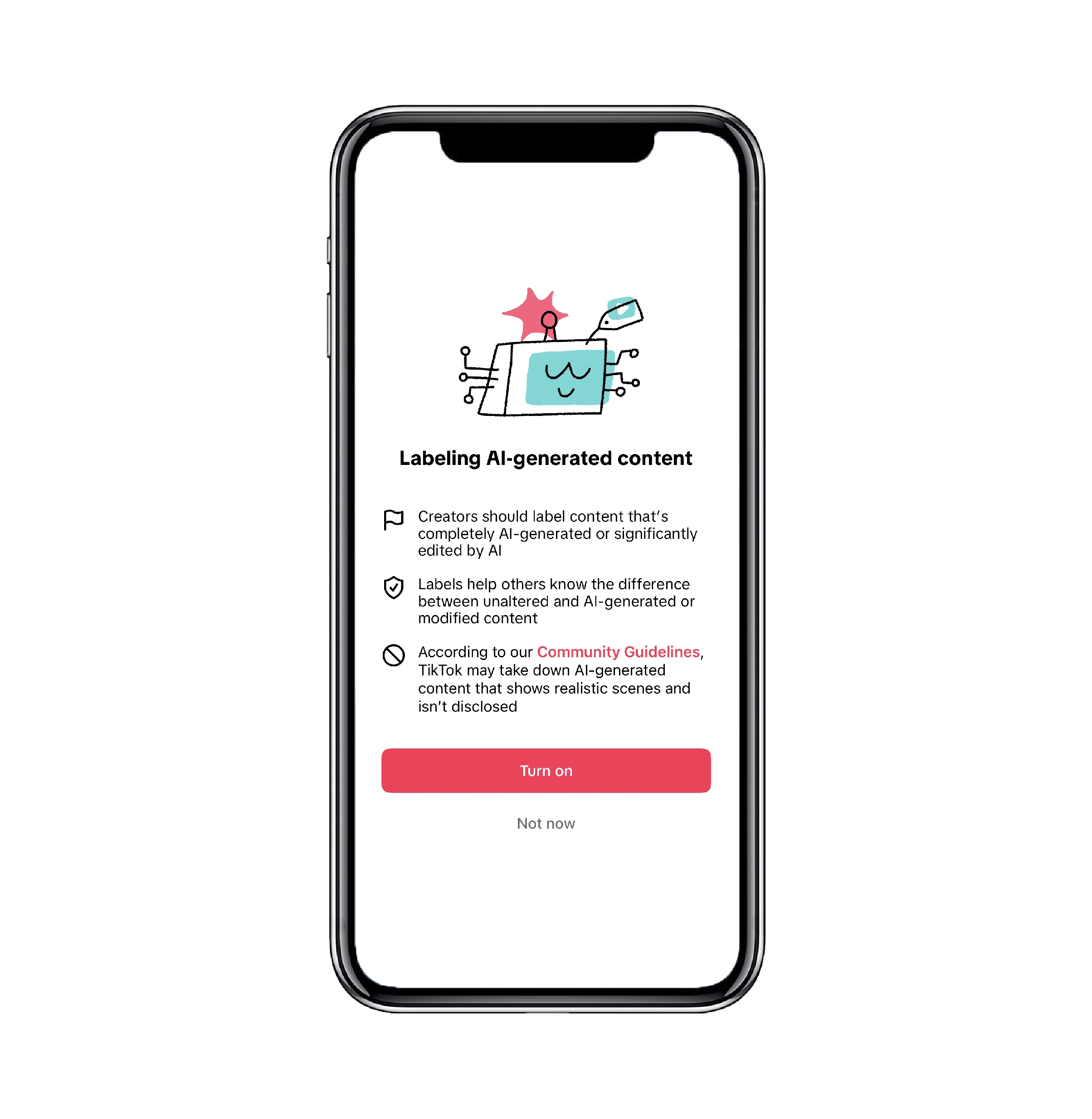

AI enables incredible creative opportunities, but it can confuse or mislead viewers who aren't aware that content was generated or edited with AI. Labeling content helps address this by making clear to viewers when content has been significantly altered or modified by AI technology. That's why we're rolling out a new tool that lets creators easily inform their community when they post AI-generated content.

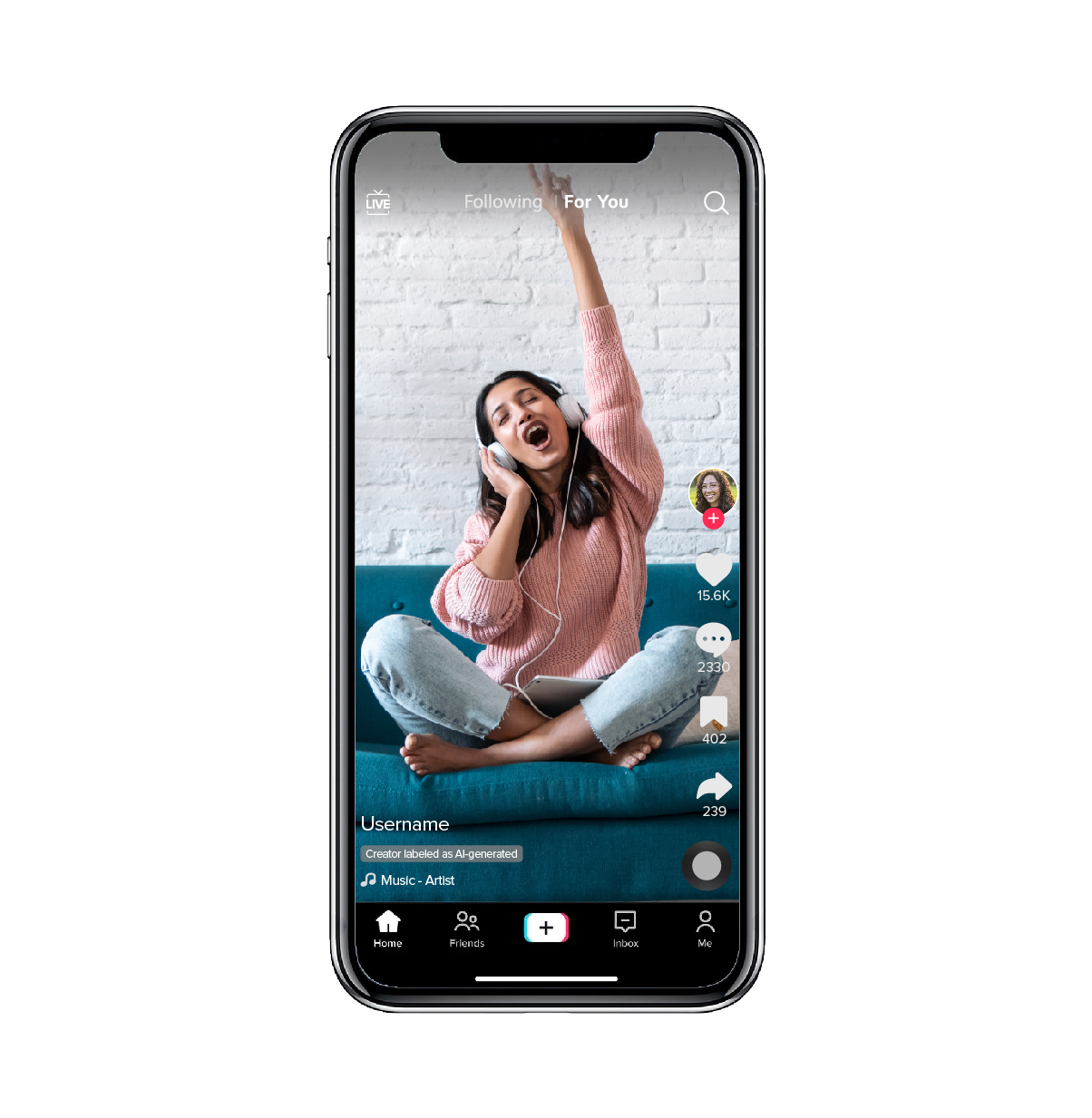

The new label will help creators showcase the innovations behind their content, and they can apply it to any content that has been completely generated or significantly edited by AI. It will also make it easier to comply with the synthetic media policy in our Community Guidelines, which we introduced earlier this year. The policy requires people to label AI-generated content that contains realistic images, audio or video, in order to help viewers contextualize the content and prevent the potential spread of misleading content. Creators can now meet this requirement with the new label (or with other types of disclosure, such as a sticker or caption).

To help our community learn how and why to use these labels, we're releasing educational videos and resources over the coming weeks. Over time, we hope these labels will become another tool that creators and viewers use to share and contextualize content, similar to verified account badges and branded content labels.

Testing automated AI-generated content labels

We are also iterating on the ways we automatically label AI-generated content. This week, we will begin testing an "AI-generated" label that we eventually plan to apply automatically to content we detect as edited or created with AI. To bring more clarity to AI-powered TikTok products, we are also renaming all TikTok AI effects to explicitly include "AI" in their name and corresponding effects label, and we have updated our guidelines for Effect House creators to do the same.

Our goal with these efforts is to build on existing content disclosures—such as our TikTok effects labels—and find a clear, intuitive and nuanced way to keep our community informed about AI-generated content.

Partnering with experts

We consulted with our Safety Advisory Councils when developing these updates, as well as with industry experts including MIT's Dr. David G. Rand, who is studying how viewers perceive different types of AI labels. Dr. Rand's research helped guide the design of our "AI-generated" labels:

"It's really important that platforms develop effective labeling policies. We have found that across different demographic groups globally, the term 'AI generated' is widely understood as applying to content that is generated by AI."

We continue to partner closely with peers, experts and civil society across our industry, knowing that AI raises complicated questions that no single platform can answer alone. In February, we committed to the Partnership on AI's Responsible Practices for Synthetic Media, a code of industry best practices for AI transparency and responsible innovation. In August, we partnered with the nonprofit Digital Moment to host roundtables where young community members shared their perspectives on the advancement of AI online.

Navigating AI as it evolves

AI-generated content is an exciting opportunity, and as it evolves, our approach will too. We will continue to iterate as we evaluate the impact of these updates, and we will work with our community to safely navigate recent advances in AI-generated content together.